Memory Management

Managing the physical memory (DRAM) is a role of the operating system.

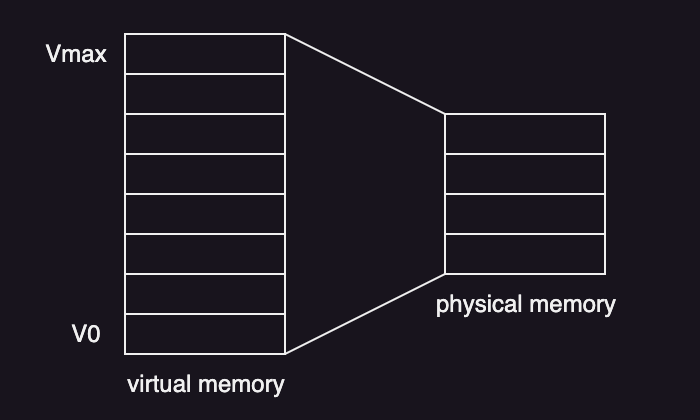

Virtual Memory vs Physical Memory

In order to provide flexibility in address space management—without being constrained by the amount of physical memory available or how it is shared among multiple processes—the operating system introduces the concept of virtual memory (also called logical memory).

Virtual memory allows each process to have a large, continuous virtual address space, independent of the actual physical memory installed in the system.

Here are several key benefits of using virtual memory:

- Efficient Multitasking: Multiple processes run concurrently in separate virtual spaces.

- Memory Isolation: Processes cannot access each other’s memory, enhancing security.

- Execution of Large Programs: Allows running programs larger than physical memory via paging or segmentation.

- Simplified Memory Management: Developers don’t need to worry about manual memory allocation as the OS handles it.

There are two approaches to implement virtual memory:

- Page-based memory management (Paging): The virtual address space is divided into fixed-size blocks called pages, which are mapped to corresponding frames in physical memory.

- Segment-based memory management (Segmentation): The address space is divided into variable-sized segments.

Although paging and segmentation can be used together, modern 64-bit systems primarily rely on paging, with segmentation supported mainly for backward compatibility. So, we’ll focus on page-based memory management.

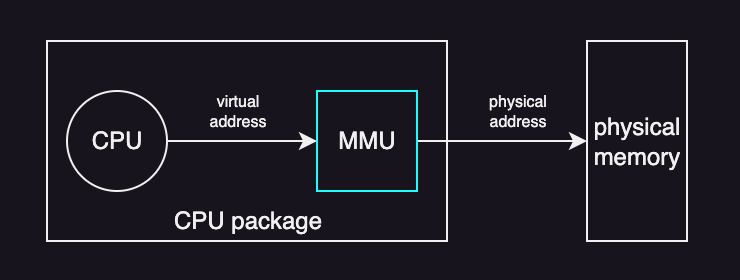

Hardware Support

Memory management isn’t purely handled by the operating system alone; it also relies heavily on hardware support. The primary hardware support for memory management is provided by the Memory Management Unit (MMU), which is integrated into modern CPUs.

The main responsibilities of the MMU include:

- Address Translation: The MMU translates virtual addresses (used by programs) into physical addresses (actual locations in the system’s DRAM).

- Page Fault Handling: The MMU detects and triggers page faults when a program attempts to access memory that is not currently mapped into physical memory. The MMU also checks for illegal access and permission violations.

In addition to the MMU, there are other hardware components that assist in memory management:

- Registers: Special registers in the MMU store information about the current state of memory management. For example, they can store the Page Table Base Register (PTBR) (which points to the page table), and Page Table Entry (PTE) registers.

- Cache - Translation Lookaside Buffer (TLB): To improve the performance of address translation, the MMU uses a small, high-speed cache called the Translation Lookaside Buffer (TLB), which stores recent virtual-to-physical address translations.

The key takeaway is that the actual translation from a virtual address to a physical address is performed by hardware, specifically by the Memory Management Unit (MMU). While the operating system sets up and manages the page tables, permissions, and overall memory policies, the MMU handles the translation process.

Page Tables

To translate virtual memory addresses into physical memory addresses, the Memory Management Unit (MMU) relies on page tables.

A page table acts as a “map” that allows the system to look up the corresponding physical address for a given virtual address.

Every process running on the system has its own page table. And during a context switch—when the operating system changes from running one process to another—it must update the reference to the current process’s page table. This update is managed by a hardware register that stores the address of the new page table, ensuring that the MMU always consults the correct mapping.

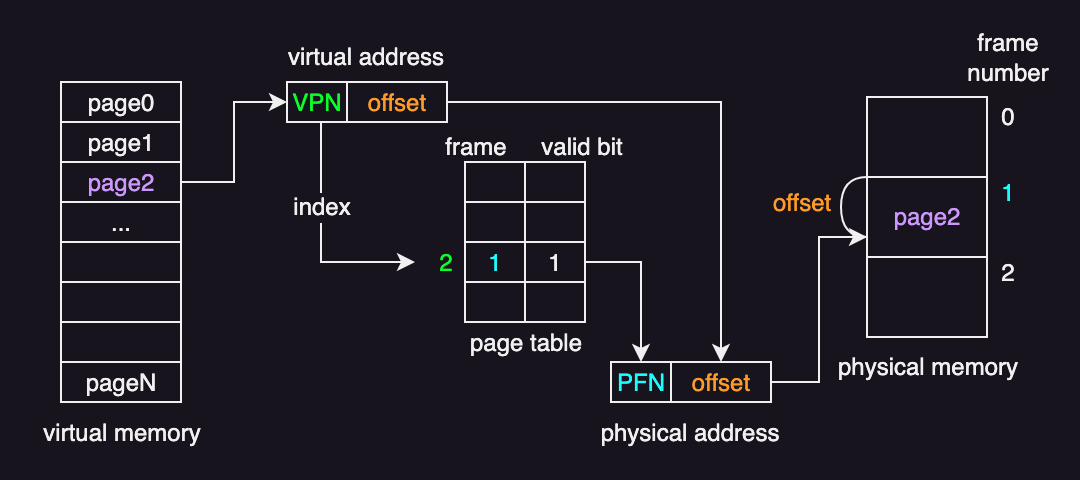

Note that virtual memory is divided into pages, and physical memory is divided into frames, both of equal size. This design means that instead of mapping every single virtual address (which would be highly inefficient), the page table maps whole pages to frames.

Page Table Entry

Each virtual address generated by the CPU is split into two parts: the Virtual Page Number (VPN) and the offset. The VPN identifies the particular virtual page, while the offset specifies the exact location within that page. When the CPU generates a virtual address, the MMU uses the VPN to index into the page table, retrieves the corresponding Physical Frame Number (PFN), and then appends the offset to get the complete physical address.

Another important aspect to consider is the concept of “allocation on first touch”. This means that the physical memory corresponding to an array (or any other data structure) is not allocated until the process actually accesses it for the first time. This strategy helps conserve memory resources because it avoids allocating memory for data structures that a program may never use.

Flags

Every page table entry contains more than just a frame number; it also includes a bit known as the valid bit (or valid-invalid bit). When the valid bit is set to 1, the page is legal and accessible; when it is 0, the page is illegal and any access attempt typically results in a page fault. This mechanism ensures that processes only use the memory allocated to them.

Besides the valid bit, page table entries often include several other bits that help manage memory efficiently. For example:

- dirty bit: indicates if a page has been modified, meaning it must be written back to disk before replacement.

- reference (access) bit: tracks if a page has been used recently, which aids in choosing pages for replacement.

- protection bit: specifying what types of operations—such as read, write, or execute—are allowed on that page.

Some systems include additional bits for caching control or special functions like copy-on-write, all of which help the operating system maintain security and performance.

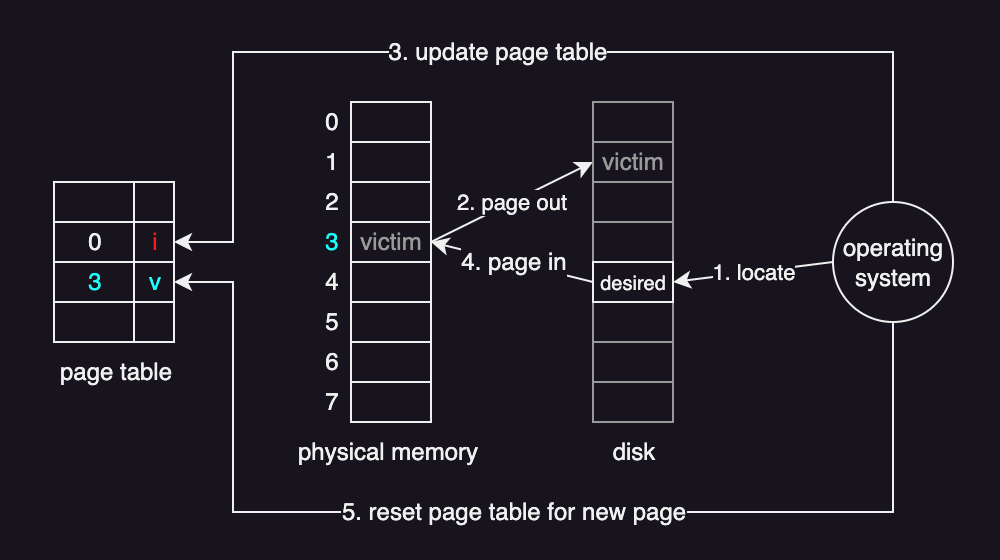

Page Fault

A page fault happens when a process tries to access a page that isn’t in main memory. When this occurs, the operating system’s page fault handler jumps into action. It determines why the page fault happened—whether the page simply needs to be loaded from disk or if the access is invalid. If the page is valid but not in memory, the handler locates it on disk, loads it into RAM, updates the page table, and then resumes the process’s execution.

Page Table Size

In Linux x86, the default page size is 4 KB, which is commonly used in memory management. However, the operating system also supports larger page sizes, such as 2 MB (large pages) and 1 GB (huge pages)

| Regular | Large | Huge | |

|---|---|---|---|

| Page Size | 4 KB | 2 MB | 1 GB |

| Offset bits | 12 | 21 | 30 |

Using larger pages have several advantages:

- Smaller page tables: Larger pages mean fewer page table entries (PTEs), reducing memory overhead and improving efficiency.

- Higher TLB hit rate: Since fewer pages are needed, the Translation Lookaside Buffer (TLB) can store more relevant page mappings, improving performance.

Larger pages also have drawbacks:

- Internal fragmentation: If a large page is not fully utilized, significant portions of it may remain unused, leading to wasted memory.

The default 4 KB page size remains the most commonly used because it strikes a balance between memory efficiency and performance.

Page Table Size in a Single-level Page Model

Consider a single-level page table model with pages that are typically 4 KB in size, which is a common configuration on systems like x86-64 and ARM.

A 32-bit system supports 2^32 virtual address space. If the page size is 4 KB (2^12 bytes), then a single page table can have up to 1 million page table entries (2^32)/(2^12). Since each page table entry (PTE) is 4 bytes, including PFN and flags, a process may then need a page table of up to 4 MB (1 million * 4 bytes).

Now, a 64-bit system vastly expands the address space to 2^64 bytes. Using the same 4 KB pages, a page table can have up to 4.5*10^15 pages (2^(64-12)). With the PTE now being 8 bytes, if you do the math, a process may need up to roughly 32 petabytes.

| 32-bit Architecture | 64-bit Architecture | |

|---|---|---|

| Page Size | 4 KB | 4 KB |

| Number of Pages | 2^32 / 2^12 = 2^20 ~= 1,048,576 pages | 2^64 / 2^12 = 2^52 ~= 4.5*10^15 pages |

| PTE Size | 4 bytes | 8 bytes |

| Page Table Size | ~= 4 MB (1,048,576 pages * 4 bytes) | ~= 32 PB (4.5*10^15 pages * 8 bytes) |

Obviously, allocating the page table contiguously in main memory has its limitations.

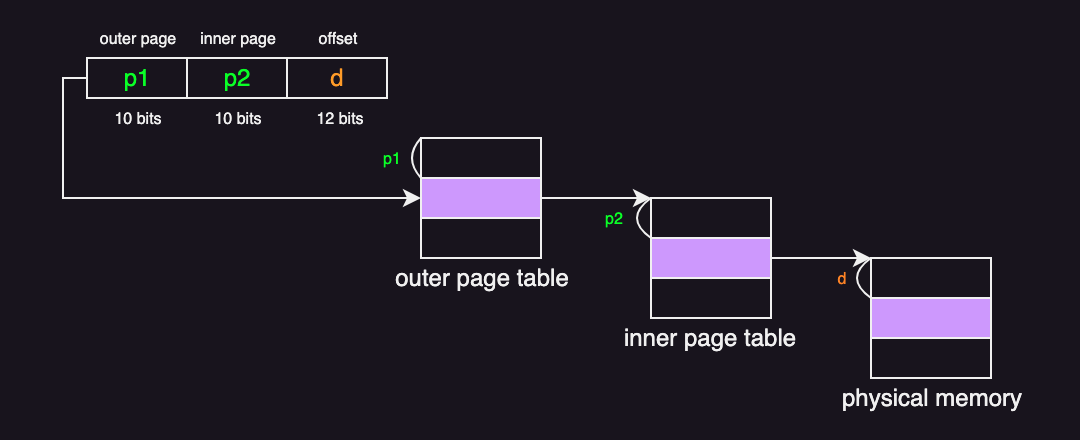

Multi-level Page Tables

Multi-level page tables are one solution to reduce the memory requirement for page tables. Instead of allocating a single, large page table for the entire address space, the page table is divided into multiple layers. This hierarchical structure allocates memory only for the portions of the address space that are actually used.

In a two-level page table, the system first consults an outer page table. This outer table doesn’t store the complete mapping details; instead, it contains pointers to inner page tables. Each inner page table is responsible for a specific region of the virtual address space. Importantly, an inner table is allocated only if that region is actively used. This lazy allocation saves memory by avoiding the creation of page tables for unused regions.

The following figure illustrates the address translation process for a two-level 32-bit paging architecture:

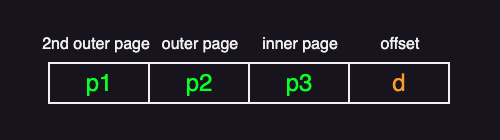

Adding more layers to the page table hierarchy can further reduce the size of the page tables. For example, a three-level page table divides the virtual address into three parts, where each part indexes into a different level of the page tables before reaching the final physical frame:

However, there’s a trade-off: increased translation latency. With each additional level in the page table hierarchy, the CPU must perform extra memory accesses to resolve a virtual address to its corresponding physical location.

In 64-bit systems with vast address spaces, even three or four levels might not be sufficient. In general, hierarchical page tables are not ideal for 64-bit architectures.

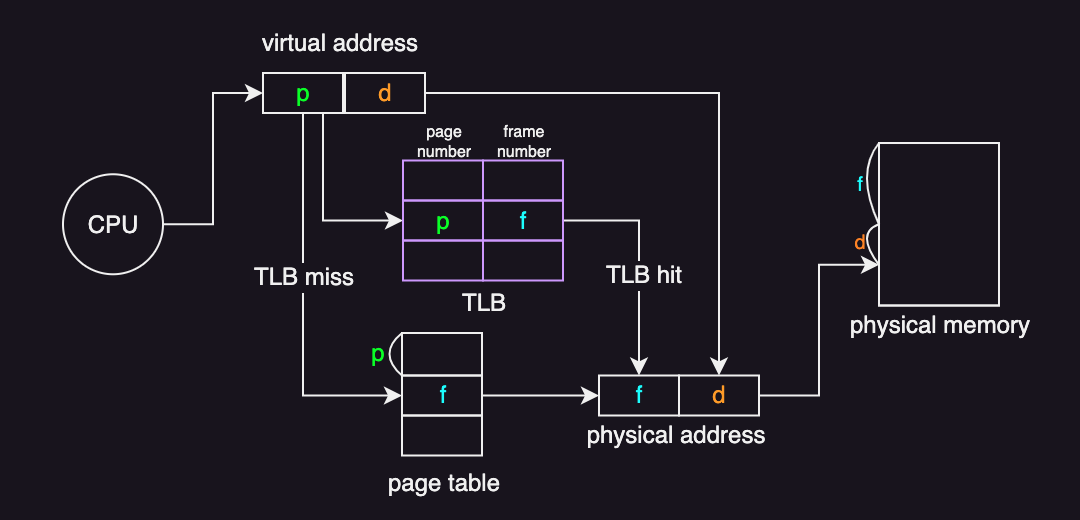

Optimizing Address Translation with TLB

As mentioned earlier, the more layers present in the page table hierarchy, the longer the address translation process takes. To speed up this process, most modern architectures incorporate a hardware cache called the Translation Lookaside Buffer (TLB). The TLB is a small, fast cache that stores a limited number of recent virtual-to-physical address mappings.

When a virtual address is accessed, the TLB is checked first. If the mapping is found in the TLB (a TLB hit), the translation is done quickly. If not (a TLB miss), the operating system has to perform a page table lookup, which takes more time.

To further speed up TLB lookups, replacement algorithms like Least Recently Used (LRU) or random replacement are often used. In fact, even a relatively small TLB can significantly improve performance due to the following properties of memory references:

- temporal locality: recently accessed addresses are likely to be accessed again soon

- spatial locality: addresses near those recently accessed are likely to be accessed next.

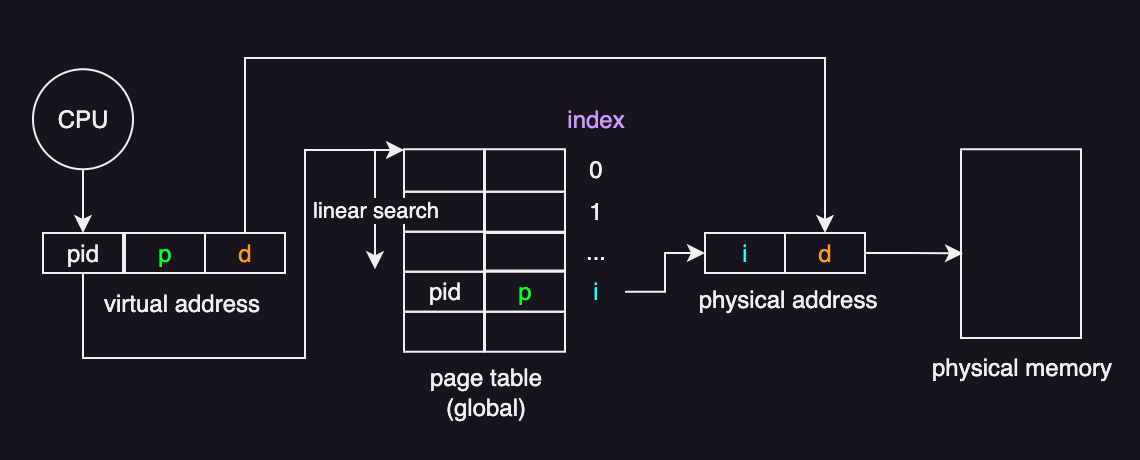

Inverted Page Tables

Inverted page tables can solve the traditional paging model’s drawback of having millions of entries per page table, which consume significant physical memory just for tracking usage. In an inverted page table, there is one entry for every physical frame rather than one entry for every virtual page of each process. This means that instead of each process having its own page table, there is a single, global page table that records information for every physical frame in memory.

Each entry in the inverted page table consists of a pair: a process identifier (pid) and a virtual page number (p).

When the system needs to translate a virtual address into a physical address, it searches the inverted table for the entry that matches both the process ID and the virtual page number.

Once it finds the correct entry, the index of that entry corresponds to the physical frame number.

By combining this frame number with the offset from the virtual address, the system can produce the complete physical address.

A challenge with this approach is that the inverted page table is organized by physical frames, but the lookup is performed using virtual addresses. This mismatch means that the system might have to search through many entries to find the correct one. In practice, however, the TLB helps alleviate this problem quite well.

Another approach to speed up the process of the linear search is to use a hash table.

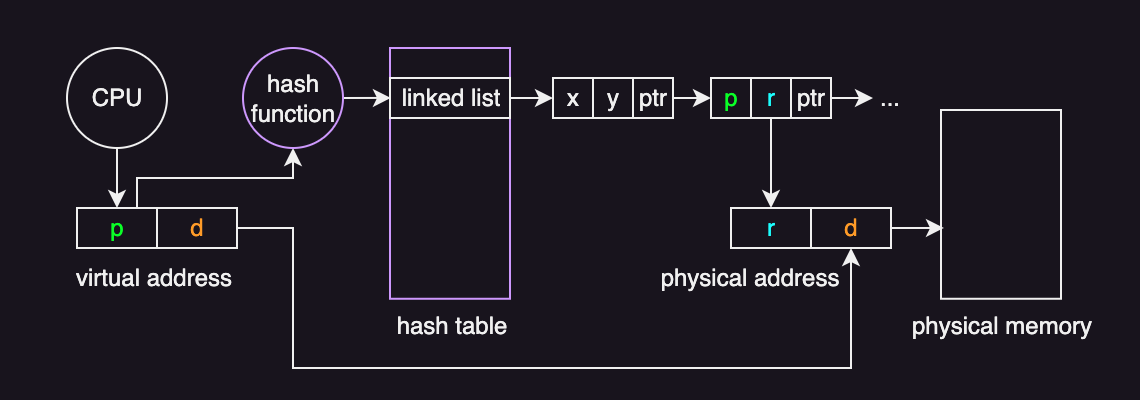

Hashed Page Tables

Hashed page tables are a common approach for handling expansive address spaces of 64-bit systems. In a hash table, each entry contains a linked list of elements that hash to the same location to handle hash collisions. (This technique is also known as chaining.) And each element consists of three fields:

- the virtual page number

- the page frame number

- a pointer to the next element in the linked list

The lookup algorithm works as follows:

- A hash function is used to hash the virtual page number to determine the corresponding entry in the hash table.

- The virtual page number is compared with the VPN in the first element in the linked list. If there is a match, the corresponding page frame number is used to form the complete physical address.

- If there is no match, the remaining entries in the linked list are sequentially searched until a matching VPN is found.

This design significantly reduces the search time, often limiting the lookup to just one or a few entries.

Memory Allocation

Deciding how much memory is allocated to a process and which specific portion of memory is assigned is primarily handled by the memory management subsystem (a part of the OS kernel) of the operating system.

Memory allocation occurs at both the kernel level and the user level, each with distinct responsibilities.

Kernel-level memory allocators are responsible for:

- Allocating memory for the kernel itself – various components of the kernel require memory for their operation.

- Providing static memory for processes – this includes sections like code and stack when a process is created.

- Managing free memory – keeping track of available pages in the system.

User-level allocators handle is responsible for:

- Dynamic memory allocation during process execution - this includes managing the heap through functions such as

malloc()andfree().

The Linux kernel, for example, uses two strategies for managing free memory: the buddy allocation and slap allocation.

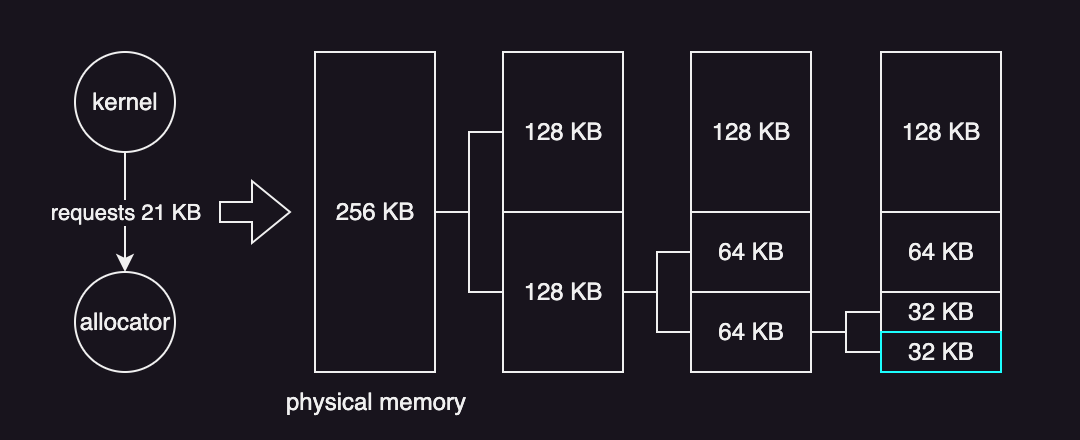

Buddy Allocation

The buddy allocator manages memory by dividing it into blocks of different sizes, always in powers of two. When a block of memory is requested, the allocator finds the smallest “buddy” block that fits the request. If no block of the required size is available, it splits a larger block into two “buddies” until the desired size is achieved.

An advantage of this strategy is its ability to quickly merge adjacent buddies into larger segments, a process known as coalescing. However, the obvious drawback is that it can still cause internal fragmentation.

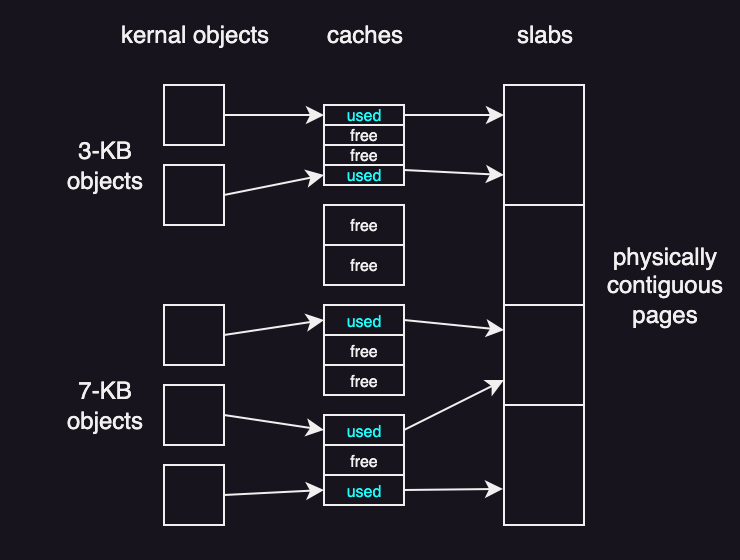

Slab Allocation

A slab consists of one or more physically contiguous pages, and a cache consists of one or more slabs. The slab allocator pre-creates caches for the different kernel data structures. For example:

- a cache for the process descriptors

- a cache for file objects

- a cache for semaphores

When a cache is first created, all objects are marked as free.

When a new object for a particular kernel data structure is requested, the allocator assigns an available free object from the corresponding cache.

Once the object is assigned, it is marked as used.

The slab allocator offers two main advantages:

- No fragmentation: Each kernel data structure has a dedicated cache, divided into slabs that match the object size.

- Quick memory allocation: Memory requests are quickly satisfied because objects are pre-allocated in the cache.

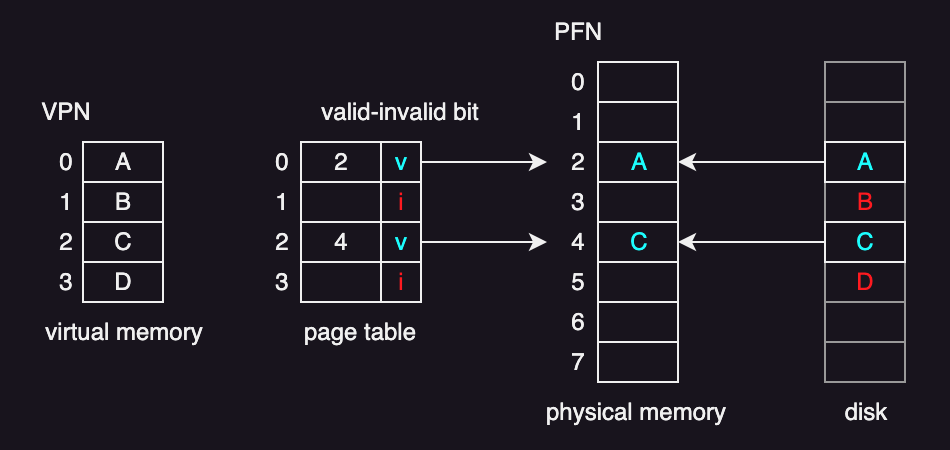

Demand Paging

Loading an entire program from disk into physical memory at execution time can be inefficient, as not all parts of the program may be immediately required. Demand paging is a commonly used technique in which pages are only loaded into physical memory when they are actually needed. With demand paging, pages are swapped between physical memory and a secondary storage device, such as a disk containing the swap partition.

Basically, the hardware uses a valid-invalid bit within each page table entry to distinguish between pages currently in memory and those stored on disk. The meaning of this bit is as follows:

- valid: The page is legal and currently resides in physical memory.

- invalid: The page is either:

- Not valid (not within the logical address space of the process), or

- Valid but currently stored on disk (not loaded into memory).

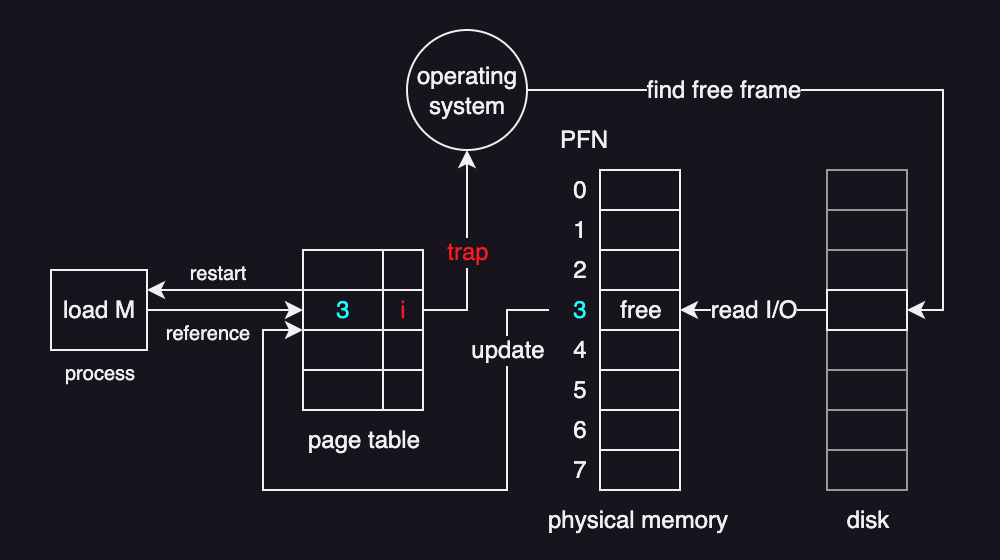

Procedure for handling a page fault:

- The MMU detects an invalid or missing page reference and triggers a trap to the OS kernel.

- The OS checks the internal tables (associated with the process control block):

- If the reference is invalid, terminate the process.

- If valid but the page isn’t in memory, continue to step 3.

- The OS selects a free memory frame for the required page.

- The OS schedules an I/O operation to load the page from disk into the allocated frame.

- After loading completes, update page tables and process information to mark the page as present.

- Return control to the interrupted process, the program counter will be restarted with the same intstructions.

When a page is “pinned”, it is locked into physical memory and cannot be swapped out. Pinning is useful when pages contain critical kernel operations, I/O buffers, or data that must remain in memory to ensure system stability and performance.

Page Replacement

Since memory is finite, the system must decide which existing page to replace when a page fault (a page is not currently in physical memory) occurs and memory is full. Page replacement is the process used to decide which pages to swap out of memory when a new page needs to be loaded.

Basic page replacement process:

- Locate the desired page on the disk.

- Select a victim page to be replaced using a page-replacement algorithm and write it back to disk. (page-out)

- Update the page table by setting the valid-invalid bit to invalid.

- Load the desired page into the freed frame. (page-in)

- Reset the page table to reflect the change.

For “when” to swap out pages, the operating system will run some page out daemon when the amount of occupied memory reaches a particular threshold. Two kinds of thresholds are:

- high watermark: when memory usage is above threshold

- low watermark: when CPU usage is below

For “which” to swap out pages, the operating system should predict based on algorithms such as:

- LRU (Least Recently Used): The page that has not been used for the longest period of time is replaced.

- FIFO (First-In-First-Out): The oldest page is replaced.

- Optimal: The page that will not be used for the longest time in the future is replaced (though this is difficult to implement in practice).

While each algorithm has its trade-offs, the goal is to minimize the number of page faults and keep the system running efficiently by managing memory effectively.

Copy-on-Write

Copy-on-Write (COW) is an optimization technique used to efficiently handle process creation, particularly in the fork() system call.

Instead of immediately duplicating the entire address space of a process, the parent and child initially share the same memory pages as read-only.

This is possible because many pages are static and don’t change.

When either process attempts to write to a shared page, the MMU detects the write operation on a protected page and triggers a page fault. The operating system then creates the actual copy of that page for the modifying process. This lazy copying reduces memory usage and improves performance by copying pages only when necessary.

Note that COW relies on MMU support to manage page protection and fault handling efficiently.